The purpose of this workshop is to explore some issues in the analysis of survey data using Stata 17. Before we begin, you will want to be sure that your copy of Stata is up-to-date. To do this, please type

update all

in the Stata command window and follow any instructions given. These updates include not only fixes to known bugs, but also add some new features that may be useful.

Before we begin looking at examples in Stata, we will quickly review some basic issues and concepts in survey data analysis.

NOTE: Most of the commands in this seminar will work with Stata versions 12-16.

Why do we need survey data analysis software?

Regular procedures in statistical software (that is not designed for survey data) analyzes data as if the data were collected using simple random sampling. For experimental and quasi-experimental designs, this is exactly what we want. However, very few surveys use a simple random sample to collect data. Not only is it nearly impossible to do so, but it is not as efficient (either financially and statistically) as other sampling methods. When any sampling method other than simple random sampling is used, we usually need to use survey data analysis software to take into account the differences between the design that was used to collect the data and simple random sampling. This is because the sampling design affects both the calculation of the point estimates and the standard errors of those estimates. If you ignore the sampling design, e.g., if you assume simple random sampling when another type of sampling design was used, both the point estimates and their standard errors will likely be calculated incorrectly. The sampling weight will affect the calculation of the point estimate, and the stratification and/or clustering will affect the calculation of the standard errors. Ignoring the clustering will likely lead to standard errors that are underestimated, possibly leading to results that seem to be statistically significant, when in fact, they are not. The difference in point estimates and standard errors obtained using non-survey software and survey software with the design properly specified will vary from data set to data set, and even between analyses using the same data set. While it may be possible to get reasonably accurate results using non-survey software, there is no practical way to know beforehand how far off the results from non-survey software will be.

Sampling designs

Most people do not conduct their own surveys. Rather, they use survey data that some agency or company collected and made available to the public. The documentation must be read carefully to find out what kind of sampling design was used to collect the data. This is very important because many of the estimates and standard errors are calculated differently for the different sampling designs. Hence, if you mis-specify the sampling design, the point estimates and standard errors will likely be wrong.

Below are some common features of many sampling designs.

Sampling weights: There are several types of weights that can be associated with a survey. Perhaps the most common is the sampling weight. A sampling weight is a probability weight that has had one or more adjustments made to it. Both a sampling weight and a probability weight are used to weight the sample back to the population from which the sample was drawn. By definition, a probability weight is the inverse of the probability of being included in the sample due to the sampling design (except for a certainty PSU, see below). The probability weight, called a pweight in Stata, is calculated as N/n, where N = the number of elements in the population and n = the number of elements in the sample. For example, if a population has 10 elements and 3 are sampled at random with replacement, then the probability weight would be 10/3 = 3.33. In a two-stage design, the probability weight is calculated as f1f2, which means that the inverse of the sampling fraction for the first stage is multiplied by the inverse of the sampling fraction for the second stage. Under many sampling plans, the sum of the probability weights will equal the population total.

While many textbooks will end their discussion of probability weights here, this definition does not fully describe the sampling weights that are included with actual survey data sets. Rather, the sampling weight, which is sometimes called a “final weight,” starts with the inverse of the sampling fraction, but then incorporates several other values, such as corrections for unit non-response, errors in the sampling frame (sometimes called non-coverage), calibration and trimming. Because these other values are included in the probability weight that is included with the data set, it is often inadvisable to modify the sampling weights, such as trying to standardize them for a particular variable, e.g., age.

Strata: Stratification is a method of breaking up the population into different groups, often by demographic variables such as gender, race or SES. Each element in the population must belong to one, and only one, strata. Once the strata have been defined, samples are taken from each stratum as if it were independent of all of the other strata. For example, if a sample is to be stratified on gender, men and women would be sampled independently of one another. This means that the probability weights for men will likely be different from the probability weights for the women. In most cases, you need to have two or more PSUs in each stratum. The purpose of stratification is to reduce the standard error of the estimates, and stratification works most effectively when the variance of the dependent variable is smaller within the strata than in the sample as a whole.

PSU: This is the primary sampling unit. This is the first unit that is sampled in the design. For example, school districts from California may be sampled and then schools within districts may be sampled. The school district would be the PSU. If states from the US were sampled, and then school districts from within each state, and then schools from within each district, then states would be the PSU. One does not need to use the same sampling method at all levels of sampling. For example, probability-proportional-to-size sampling may be used at level 1 (to select states), while cluster sampling is used at level 2 (to select school districts). In the case of a simple random sample, the PSUs and the elementary units are the same. In general, accounting for the clustering in the data (i.e., using the PSUs), will increase the standard errors of the point estimates. Conversely, ignoring the PSUs will tend to yield standard errors that are too small, leading to false positives when doing significance tests.

FPC: This is the finite population correction. This is used when the sampling fraction (the number of elements or respondents sampled relative to the population) becomes large. The FPC is used in the calculation of the standard error of the estimate. If the value of the FPC is close to 1, it will have little impact and can be safely ignored. In some survey data analysis programs, such as SUDAAN, this information will be needed if you specify that the data were collected without replacement (see below for a definition of “without replacement”). The formula for calculating the FPC is ((N-n)/(N-1))1/2, where N is the number of elements in the population and n is the number of elements in the sample. To see the impact of the FPC for samples of various proportions, suppose that you had a population of 10,000 elements.

Sample size (n) FPC 1 1.0000 10 .9995 100 .9950 500 .9747 1000 .9487 5000 .7071 9000 .3162

Replicate weights: Replicate weights are a series of weight variables that are used to correct the standard errors for the sampling plan. They serve the same function as the PSU and strata variables (which are used a Taylor series linearization) to correct the standard errors of the estimates for the sampling design. Many public use data sets are now being released with replicate weights instead of PSUs and strata in an effort to more securely protect the identity of the respondents. In theory, the same standard errors will be obtained using either the PSU and strata or the replicate weights. There are different ways of creating replicate weights; the method used is determined by the sampling plan. The most common are balanced repeated and jackknife replicate weights. You will need to read the documentation for the survey data set carefully to learn what type of replicate weight is included in the data set; specifying the wrong type of replicate weight will likely lead to incorrect standard errors. For more information on replicate weights, please see Stata Library: Replicate Weights and Appendix D of the WesVar Manual by Westat, Inc. Several statistical packages, including Stata, SAS, R, Mplus, SUDAAN and WesVar, allow the use of replicate weights.

Consequences of not using the design elements

Sampling design elements include the sampling weights, post-stratification weights (if provided), PSUs, strata, and replicate weights. Rarely are all of these elements included in a single public-use data set. However, ignoring the design elements that are included can often lead to inaccurate point estimates and/or inaccurate standard errors.

Sampling with and without replacement

Most samples collected in the real world are collected “without replacement”. This means that once a respondent has been selected to be in the sample and has participated in the survey, that particular respondent cannot be selected again to be in the sample. Many of the calculations change depending on if a sample is collected with or without replacement. Hence, programs like SUDAAN request that you specify if a survey sampling design was implemented with our without replacement, and an FPC is used if sampling without replacement is used, even if the value of the FPC is very close to one.

Examples

For the examples in this workshop, we will use the data set from NHANES (the National Health and Nutrition Examination Survey) that comes with Stata.

Reading the documentation

The first step in analyzing any survey data set is to read the documentation. With many of the public use data sets, the documentation can be quite extensive and sometimes even intimidating. Instead of trying to read the documentation “cover to cover”, there are some parts you will want to focus on. First, read the Introduction. This is usually an “easy read” and will orient you to the survey. There is usually a section or chapter called something like “Sample Design and Analysis Guidelines”, “Variance Estimation”, etc. This is the part that tells you about the design elements included with the survey and how to use them. Some even give example code. If multiple sampling weights have been included in the data set, there will be some instruction about when to use which one. If there is a section or chapter on missing data or imputation, please read that. This will tell you how missing data were handled. You should also read any documentation regarding the specific variables that you intend to use. As we will see little later on, we will need to look at the documentation to get the value labels for the variables. This is especially important because some of the values are actually missing data codes, and you need to do something so that Stata doesn’t treat those as valid values (or you will get some very “interesting” means, totals, etc.).

Getting NHANES data into Stata

For the examples in this workshop, we will be using a version of the NHANES II dataset that comes with Stata. However, for real analyses, you need to download data from the NHANES website. The following commands can be used to open the NHANES data in Stata and save them in Stata format. I have also sorted the data before saving them (because I will merge the files), but this is not technically necessary.

* demographics clear fdause "D:\Data\Seminars\Applied Survey Stata 17\demo_g.xpt" sort seqn save "D:\Data\Seminars\Applied Survey Stata 17\demo_g", replace

The do-file that imports the data, merges the files and recodes the variables can be found here. The data file created by this do-file is not the data file used in this workshop. It is linked here to provide an example of how NHANES data can be imported into Stata, cleaned and merged to create a working data file.

The svyset command

Before we can start our analyses, we need to issue the svyset command. The svyset command tells Stata about the design elements in the survey. Once this command has been issued, all you need to do for your analyses is use the svy: prefix before each command. Because the 2011-2012 NHANES data were released with a sampling weight (wtint2yr), a PSU variable (sdmvpsu) and a strata variable (sdmvstra), we will use these our svyset command. The svyset command looks like this:

use https://www.stata-press.com/data/r17/nhanes2f, clear svyset psuid [pweight=finalwgt], strata(stratid) singleunit(centered)Sampling weights: finalwgt VCE: linearized Single unit: centered Strata 1: stratid Sampling unit 1: psuid FPC 1:

The singleunit option was added in Stata 10. This option allows for different ways of handling a single PSU in a stratum. There are usually two or more PSUs in each stratum. If there is only one PSU in stratum, due to missing data or a subpopulation specification, for example, the variance cannot be estimated in that stratum. If you use the default option, missing, then you will get no standard errors when Stata encounters a single PSU in a stratum. There are three other options. One is certainty, meaning that the singleton PSUs be treated as certainty PSUs; certainty PSUs are PSUs that were selected into the sample with a probability of 1 (in other words, these PSUs were certain to be in the sample) and do not contribute to the standard error. The scaled option gives a scaled version of the certainty option. The scaling factor comes from using the average of the variances from the strata with multiple sampling units for each stratum with one PSU. The centered option centers strata with one sampling unit at the grand mean instead of the stratum mean.

Now that we have issued the svyset command, we can use the svydescribe command to get information on the strata and PSUs. It is a good idea to use this command to learn about the strata and PSUs in the dataset.

svydescribeSurvey: Describing stage 1 sampling units Sampling weights: finalwgt VCE: linearized Single unit: centered Strata 1: stratid Sampling unit 1: psuid FPC 1: Number of obs per unit Stratum # units # obs Min Mean Max ---------------------------------------------------------- 1 2 380 165 190.0 215 2 2 185 67 92.5 118 3 2 348 149 174.0 199 4 2 460 229 230.0 231 5 2 252 105 126.0 147 6 2 298 131 149.0 167 7 2 476 206 238.0 270 8 2 337 158 168.5 179 9 2 243 100 121.5 143 10 2 262 119 131.0 143 11 2 275 120 137.5 155 12 2 314 144 157.0 170 13 2 342 154 171.0 188 14 2 405 200 202.5 205 15 2 380 189 190.0 191 16 2 336 159 168.0 177 17 2 393 180 196.5 213 18 2 359 144 179.5 215 20 2 283 125 141.5 158 21 2 213 102 106.5 111 22 2 301 128 150.5 173 23 2 340 158 170.0 182 24 2 434 202 217.0 232 25 2 254 115 127.0 139 26 2 261 129 130.5 132 27 2 283 139 141.5 144 28 2 298 135 149.0 163 29 2 502 215 251.0 287 30 2 365 166 182.5 199 31 2 308 143 154.0 165 32 2 450 211 225.0 239 ---------------------------------------------------------- 31 62 10,337 67 166.7 287

The output above tells us that there are 31 strata with two PSUs in each. There are 62 PSUs. There are a different number of observations in each PSU and in each strata. There are a minimum of 67 observations, a maximum of 287 observations and an average of 166.7 observations in the PSUs. The design degrees of freedom is calculated as the number of strata minus the number of PSUs. For this data set, that is 62-31 = 31.

Descriptive statistics

We will start by calculating some descriptive statistics of some of the continuous variables. We can use the svy: mean command to get the mean of continuous variables, and we can follow that command with the estat sd command to get the standard deviation or variance of the variable. The var option on the estat sd command can be used to get the variance.

svy: mean age

(running mean on estimation sample)

Survey: Mean estimation

Number of strata = 31 Number of obs = 10,337

Number of PSUs = 62 Population size = 117,023,659

Design df = 31

--------------------------------------------------------------

| Linearized

| Mean std. err. [95% conf. interval]

-------------+------------------------------------------------

age | 42.23732 .3034412 41.61844 42.85619

--------------------------------------------------------------

estat sd

-------------------------------------

| Mean Std. dev.

-------------+-----------------------

age | 42.23732 15.50095

-------------------------------------

estat sd, var

-------------------------------------

| Mean Variance

-------------+-----------------------

age | 42.23732 240.2795

-------------------------------------

svy: mean copper

(running mean on estimation sample)

Survey: Mean estimation

Number of strata = 31 Number of obs = 9,118

Number of PSUs = 62 Population size = 103,505,700

Design df = 31

--------------------------------------------------------------

| Linearized

| Mean std. err. [95% conf. interval]

-------------+------------------------------------------------

copper | 124.7232 .6657517 123.3654 126.081

--------------------------------------------------------------

svy: mean hct // hemocrit

(running mean on estimation sample)

Survey: Mean estimation

Number of strata = 31 Number of obs = 10,337

Number of PSUs = 62 Population size = 117,023,659

Design df = 31

--------------------------------------------------------------

| Linearized

| Mean std. err. [95% conf. interval]

-------------+------------------------------------------------

hct | 41.98352 .0762331 41.82804 42.139

--------------------------------------------------------------

Notice that a different number of observations were used in each analysis. This is important, because if you use these three variables in the same call to svy: mean, you will get different estimates of the means for each of the variables. This is because copper has missing values. There are 9118 observations that have valid (AKA non-missing) values for all three variables, and those are used in the calculations of the means when all of the variables are used in the same call to svy: mean.

svy: mean age copper hct

(running mean on estimation sample)

Survey: Mean estimation

Number of strata = 31 Number of obs = 9,118

Number of PSUs = 62 Population size = 103,505,700

Design df = 31

--------------------------------------------------------------

| Linearized

| Mean std. err. [95% conf. interval]

-------------+------------------------------------------------

age | 42.32525 .3239445 41.66457 42.98594

copper | 124.7232 .6657517 123.3654 126.081

hct | 41.97078 .0843598 41.79873 42.14283

--------------------------------------------------------------

Let’s get some descriptive statistics on some of the binary variables, such as female and rural.

svy: mean female

(running mean on estimation sample)

Survey: Mean estimation

Number of strata = 31 Number of obs = 10,337

Number of PSUs = 62 Population size = 117,023,659

Design df = 31

--------------------------------------------------------------

| Linearized

| Mean std. err. [95% conf. interval]

-------------+------------------------------------------------

female | .5204215 .005773 .5086474 .5321956

--------------------------------------------------------------

estat sd

-------------------------------------

| Mean Std. dev.

-------------+-----------------------

female | .5204215 .499607

-------------------------------------

svy: mean rural

(running mean on estimation sample)

Survey: Mean estimation

Number of strata = 31 Number of obs = 10,337

Number of PSUs = 62 Population size = 117,023,659

Design df = 31

--------------------------------------------------------------

| Linearized

| Mean std. err. [95% conf. interval]

-------------+------------------------------------------------

rural | .3174388 .0212654 .2740677 .3608099

--------------------------------------------------------------

estat sd

-------------------------------------

| Mean Std. dev.

-------------+-----------------------

rural | .3174388 .4655023

-------------------------------------

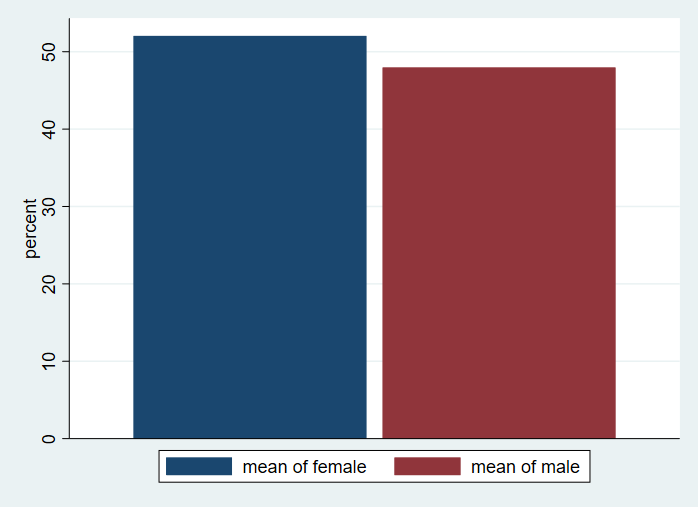

Taking the mean of a variable that is coded 0/1 gives the proportion of 1s. The output above indicates that approximately 52% of the observations in our data set are from females; 31.74% of respondents live in a rural area.

Of course, the svy: tab command can also be used with binary variables. The proportion of .5204 matches the .5204215 that we found with the svy: mean command.

svy: tab female

(running tabulate on estimation sample)

Number of strata = 31 Number of obs = 10,337

Number of PSUs = 62 Population size = 117,023,659

Design df = 31

----------------------

Female | proportion

----------+-----------

Male | .4796

Female | .5204

|

Total | 1

----------------------

Key: proportion = Cell proportion

By default, svy: tab gives proportions. If you would prefer to see the actual counts, you will need to use the count option. Oftentimes, the counts are too large for the display space in the table, so other options, such cellwidth and format, need to be used to display the counts. The missing option is often useful if the variable has any missing values (the variable female does not).

svy: tab female, missing count cellwidth(15) format(%15.2g)

(running tabulate on estimation sample)

Number of strata = 31 Number of obs = 10,337

Number of PSUs = 62 Population size = 117,023,659

Design df = 31

---------------------------

Female | count

----------+----------------

Male | 56122035

Female | 60901624

|

Total | 117023659

---------------------------

Key: count = Weighted count

svy: tab female rural, missing count cellwidth(15) format(%15.2g)

(running tabulate on estimation sample)

Number of strata = 31 Number of obs = 10,337

Number of PSUs = 62 Population size = 117,023,659

Design df = 31

-------------------------------------------------------------

| Rural

Female | Urban Rural Total

----------+--------------------------------------------------

Male | 37327257 18794778 56122035

Female | 42548556 18353068 60901624

|

Total | 79875813 37147846 117023659

-------------------------------------------------------------

Key: Weighted count

Pearson:

Uncorrected chi2(1) = 13.3910

Design-based F(1, 31) = 12.3092 P = 0.0014

Using the col option with svy: tab will give the column proportions. As you can see, the values in the column “Total” are the same as those from svy: tab female.

svy: tab female rural, col

(running tabulate on estimation sample)

Number of strata = 31 Number of obs = 10,337

Number of PSUs = 62 Population size = 117,023,659

Design df = 31

-------------------------------

| Rural

Female | Urban Rural Total

----------+--------------------

Male | .4673 .5059 .4796

Female | .5327 .4941 .5204

|

Total | 1 1 1

-------------------------------

Key: Column proportion

Pearson:

Uncorrected chi2(1) = 13.3910

Design-based F(1, 31) = 12.3092 P = 0.0014

Of course, the svy: proportion command can also be used to get proportions.

svy: proportion female

(running proportion on estimation sample)

Survey: Proportion estimation

Number of strata = 31 Number of obs = 10,337

Number of PSUs = 62 Population size = 117,023,659

Design df = 31

--------------------------------------------------------------

| Linearized Logit

| Proportion std. err. [95% conf. interval]

-------------+------------------------------------------------

female |

Male | .4795785 .005773 .4678179 .4913618

Female | .5204215 .005773 .5086382 .5321821

--------------------------------------------------------------

Using the estat post-estimation command after the svy: mean command also allows you to get the design effects, misspecification effects, unweighted and weighted sample sizes, or the coefficient of variation.

The Deff and the Deft are types of design effects, which tell you about the efficiency of your sample. The Deff is a ratio of two variances. In the numerator we have the variance estimate from the current sample (including all of its design elements), and in the denominator we have the variance from a hypothetical sample of the same size drawn as an SRS. In other words, the Deff tells you how efficient your sample is compared to an SRS of equal size. If the Deff is less than 1, your sample is more efficient than SRS; usually the Deff is greater than 1. The Deft is the ratio of two standard error estimates. Again, the numerator is the standard error estimate from the current sample. The denominator is a hypothetical SRS (with replacement) standard error from a sample of the same size as the current sample.

You can also use the meff and the meft option to get the misspecification effects. Misspecification effects are a ratio of the variance estimate from the current analysis to a hypothetical variance estimated from a misspecified model. Please see the Stata documentation for more details on how these are calculated.

The coefficient of variation is the ratio of the standard error to the mean, multiplied by 100% (see pages 38-40 of the Stata 17 svy manual). It is an indication of the variability relative to the mean in the population and is not affected by the unit of measurement of the variable.

svy: mean age (running mean on estimation sample) Survey: Mean estimation Number of strata = 31 Number of obs = 10,337 Number of PSUs = 62 Population size = 117,023,659 Design df = 31 -------------------------------------------------------------- | Linearized | Mean std. err. [95% conf. interval] -------------+------------------------------------------------ age | 42.23732 .3034412 41.61844 42.85619 -------------------------------------------------------------- estat effects ---------------------------------------------------------- | Linearized | Mean std. err. DEFF DEFT -------------+-------------------------------------------- age | 42.23732 .3034412 3.9612 1.99028 ---------------------------------------------------------- estat effects, deff deft meff meft ------------------------------------------------------------------------------ | Linearized | Mean std. err. DEFF DEFT MEFF MEFT -------------+---------------------------------------------------------------- age | 42.23732 .3034412 3.9612 1.99028 3.211 1.79193 ------------------------------------------------------------------------------ estat size ---------------------------------------------------------------------- | Linearized | Mean std. err. Obs Size -------------+-------------------------------------------------------- age | 42.23732 .3034412 10,337 117,023,659 ---------------------------------------------------------------------- estat cv ------------------------------------------------ | Linearized | Mean std. err. CV (%) -------------+---------------------------------- age | 42.23732 .3034412 .71842 ------------------------------------------------display (.3034412/42.23732)*100 .71841963

Analysis of subpopulations

Before we continue with our descriptive statistics, we should pause to discuss the analysis of subpopulations. The analysis of subpopulations is one place where survey data and experimental data are quite different. If you have data from an experiment (or quasi-experiment), and you want to analyze the responses from, say, just the women, or just people over age 50, you can just delete the unwanted cases from the data set or use the by: prefix. Complex survey data are different. With survey data, you (almost) never get to delete any cases from the data set, even if you will never use them in any of your analyses. Because of the way the -by- prefix works, you usually don’t use it with survey data either. (For an excellent discussion of conditional versus unconditional subdomain, please this presentation by Brady West, Ph.D.) Instead, Stata has provided two options that allow you to correctly analyze subpopulations of your survey data. These options are subpop and over. The subpop option is sort of like deleting unwanted cases (without really deleting them, of course), and the over option is very similar to -by- processing in that the output contains the estimates for each group. We will start with some examples of the subpop option.

First, however, let’s take a second to see why deleting cases from a survey data set can be so problematic. If the data set is subset (meaning that observations not to be included in the subpopulation are deleted from the data set), the standard errors of the estimates cannot be calculated correctly. When the subpopulation option is used, only the cases defined by the subpopulation are used in the calculation of the estimate, but all cases are used in the calculation of the standard errors. For more information on this issue, please see Sampling Techniques, Third Edition by William G. Cochran (1977) and Small Area Estimation by J. N. K. Rao (2003). Also, if you look in the Stata 17 svy manual, you will find an entire section (pages 67-72) dedicated to the analysis of subpopulations. The formulas for using both if and subpop are given, along with an explanation of how they are different. If you look at the help for any svy: command, you will see the same warning:

Warning: Use of if or in restrictions will not produce correct variance

estimates for subpopulations in many cases. To compute estimates for

subpopulations, use the subpop() option. The full specification for subpop()

is

subpop([varname] [if])

The subpop option on the svy: prefix without the if is used with binary variables. (Technically, all cases coded as not 0 and not missing are part of the subpopulation; this means that if your subpopulation variable has values of 1 and 2, all of the observations will be included in the subpopulation unless you use a different syntax.) You may need to create a binary variable (using the generate command) in which all of the observations be to included in the subpopulation are coded as 1 and all other observations are coded as 0. For our example, we will use the variable female for our subpopulation variable, so that only females will be included in calculation of the point estimate.

svy: mean age

(running mean on estimation sample)

Survey: Mean estimation

Number of strata = 31 Number of obs = 10,337

Number of PSUs = 62 Population size = 117,023,659

Design df = 31

--------------------------------------------------------------

| Linearized

| Mean std. err. [95% conf. interval]

-------------+------------------------------------------------

age | 42.23732 .3034412 41.61844 42.85619

--------------------------------------------------------------

svy, subpop(female): mean age

(running mean on estimation sample)

Survey: Mean estimation

Number of strata = 31 Number of obs = 10,337

Number of PSUs = 62 Population size = 117,023,659

Subpop. no. obs = 5,428

Subpop. size = 60,901,624

Design df = 31

--------------------------------------------------------------

| Linearized

| Mean std. err. [95% conf. interval]

-------------+------------------------------------------------

age | 42.5498 .3717231 41.79166 43.30793

--------------------------------------------------------------

To get the mean for the males, we can specify the subpopulation as the variable female not equal to 1 (meaning the observations that are coded 0).

svy, subpop(if female != 1): mean age

(running mean on estimation sample)

Survey: Mean estimation

Number of strata = 31 Number of obs = 10,337

Number of PSUs = 62 Population size = 117,023,659

Subpop. no. obs = 4,909

Subpop. size = 56,122,035

Design df = 31

--------------------------------------------------------------

| Linearized

| Mean std. err. [95% conf. interval]

-------------+------------------------------------------------

age | 41.89822 .339303 41.20621 42.59024

--------------------------------------------------------------

We can also use the over option, which will give the results for each level of the categorical variable listed. The over option is available only for svy: mean, svy: proportion, svy: ratio and svy: total.

svy, over(female): mean age

(running mean on estimation sample)

Survey: Mean estimation

Number of strata = 31 Number of obs = 10,337

Number of PSUs = 62 Population size = 117,023,659

Design df = 31

--------------------------------------------------------------

| Linearized

| Mean std. err. [95% conf. interval]

-------------+------------------------------------------------

c.age@female |

Male | 41.89822 .339303 41.20621 42.59024

Female | 42.5498 .3717231 41.79166 43.30793

--------------------------------------------------------------

We can include more than one variable with the over option; this will give us results for every combination of the variables listed.

svy, over(health female): mean copper

(running mean on estimation sample)

Survey: Mean estimation

Number of strata = 31 Number of obs = 9,116

Number of PSUs = 62 Population size = 103,479,298

Design df = 31

------------------------------------------------------------------------

| Linearized

| Mean std. err. [95% conf. interval]

-----------------------+------------------------------------------------

c.copper@health#female |

Poor#Male | 125.1899 1.800517 121.5177 128.8621

Poor#Female | 140.3425 2.196568 135.8626 144.8224

Fair#Male | 118.1246 1.288914 115.4958 120.7534

Fair#Female | 137.6154 1.273191 135.0187 140.2121

Average#Male | 113.7033 .9745251 111.7158 115.6909

Average#Female | 140.9529 1.780904 137.3207 144.5851

Good#Male | 108.7135 .6959349 107.2941 110.1328

Good#Female | 137.5177 1.374578 134.7142 140.3211

Excellent#Male | 106.3076 .5571644 105.1712 107.4439

Excellent#Female | 132.7433 1.569208 129.5429 135.9438

------------------------------------------------------------------------

We can also use the subpop option and the over option together. In the example below, we get the mean of copper for females in each combination of race and region (12 levels).

svy, subpop(female): mean copper, over(race region)

(running mean on estimation sample)

Survey: Mean estimation

Number of strata = 31 Number of obs = 9,677

Number of PSUs = 62 Population size = 109,683,584

Subpop. no. obs = 4,768

Subpop. size = 53,561,549

Design df = 31

----------------------------------------------------------------------

| Linearized

| Mean std. err. [95% conf. interval]

---------------------+------------------------------------------------

c.copper@race#region |

White#NE | 133.8487 2.644552 128.4551 139.2423

White#MW | 135.3951 1.914987 131.4895 139.3008

White#S | 135.4202 1.677846 131.9982 138.8422

White#W | 140.4265 2.395216 135.5414 145.3115

Black#NE | 149.2318 3.040079 143.0315 155.4321

Black#MW | 146.0234 3.755621 138.3638 153.683

Black#S | 156.3648 4.922914 146.3245 166.4052

Black#W | 145.7466 1.902169 141.8671 149.6261

Other#NE | 103.1149 5.877161 91.12837 115.1015

Other#MW | 123.9078 5.761588 112.1569 135.6586

Other#S | 136.9562 19.24526 97.70521 176.2071

Other#W | 120.8002 1.899352 116.9265 124.674

----------------------------------------------------------------------

In some situations, no standard error or confidence intervals will be given. This may be because there are very few observations at that level. To see if this is indeed the cause, we can use the estat size command. The unweighted number of cases is given in the column titled “Obs”, and the weighted (or estimated subpopulation size) is given in the column titled “Size”.

estat size

----------------------------------------------------------------------

| Linearized

Over | Mean std. err. Obs Size

-------------+--------------------------------------------------------

c.copper@|

race#region |

White#NE | 133.8487 2.644552 952 10,987,657

White#MW | 135.3951 1.914987 1,142 11,954,684

White#S | 135.4202 1.677846 1,099 12,006,761

White#W | 140.4265 2.395216 1,025 12,587,312

Black#NE | 149.2318 3.040079 52 576,302

Black#MW | 146.0234 3.755621 120 1,059,794

Black#S | 156.3648 4.922914 239 2,572,543

Black#W | 145.7466 1.902169 51 540,474

Other#NE | 103.1149 5.877161 6 89,269

Other#MW | 123.9078 5.761588 8 101,797

Other#S | 136.9562 19.24526 11 100,557

Other#W | 120.8002 1.899352 63 984,399

----------------------------------------------------------------------

The list command can be used to investigate combinations of subpopulation variables where the N is small.

The lincom command can be used to make comparisons between subpopulations. In the first example below, we will compare the means for males and females for iron. We can use the display command to see how the point estimate in the output of the lincom command is calculated. The value of using the lincom command is that the standard error of point estimate is also calculated, as well as the test statistic, p-value and 95% confidence interval. In the following examples, we will compare the iron level between males and females. Please see page 121 of the Stata 17 svy manual (first example in the section on survey post estimation) for more information on using lincom after the svy, subpop(): mean command.

svy, over(female): mean iron

(running mean on estimation sample)

Survey: Mean estimation

Number of strata = 31 Number of obs = 10,337

Number of PSUs = 62 Population size = 117,023,659

Design df = 31

---------------------------------------------------------------

| Linearized

| Mean std. err. [95% conf. interval]

--------------+------------------------------------------------

c.iron@female |

Male | 104.8145 .5595657 103.6733 105.9558

Female | 97.17922 .6698075 95.81314 98.5453

---------------------------------------------------------------

We will need to add the coeflegend option to get Stata’s labels for the values we need.

svy, over(female): mean iron, coeflegend (running mean on estimation sample) Survey: Mean estimation Number of strata = 31 Number of obs = 10,337 Number of PSUs = 62 Population size = 117,023,659 Design df = 31 ------------------------------------------------------------------------------- | Mean Legend --------------+---------------------------------------------------------------- c.iron@female | Male | 104.8145 _b[c.iron@0bn.female] Female | 97.17922 _b[c.iron@1.female] ------------------------------------------------------------------------------- lincom _b[c.iron@0bn.female] - _b[c.iron@01.female] ( 1) c.iron@0bn.female - c.iron@1.female = 0 ------------------------------------------------------------------------------ Mean | Coefficient Std. err. t P>|t| [95% conf. interval] -------------+---------------------------------------------------------------- (1) | 7.635327 .7467989 10.22 0.000 6.112221 9.158434 ------------------------------------------------------------------------------display 104.8145 - 97.17922 // a little rounding error 7.63528

In the next example, we compare different regions with respect to mean of copper.

svy, over(region): mean copper

(running mean on estimation sample)

Survey: Mean estimation

Number of strata = 31 Number of obs = 9,118

Number of PSUs = 62 Population size = 103,505,700

Design df = 31

-----------------------------------------------------------------

| Linearized

| Mean std. err. [95% conf. interval]

----------------+------------------------------------------------

c.copper@region |

NE | 122.5009 1.6006 119.2364 125.7653

MW | 123.5889 1.381478 120.7714 126.4064

S | 128.0201 1.162307 125.6495 130.3906

W | 124.3029 1.337444 121.5752 127.0306

-----------------------------------------------------------------

svy, over(region): mean copper, coeflegend

(running mean on estimation sample)

Survey: Mean estimation

Number of strata = 31 Number of obs = 9,118

Number of PSUs = 62 Population size = 103,505,700

Design df = 31

---------------------------------------------------------------------------------

| Mean Legend

----------------+----------------------------------------------------------------

c.copper@region |

NE | 122.5009 _b[c.copper@1bn.region]

MW | 123.5889 _b[c.copper@2.region]

S | 128.0201 _b[c.copper@3.region]

W | 124.3029 _b[c.copper@4.region]

---------------------------------------------------------------------------------

lincom _b[c.copper@4.region] - _b[c.copper@1bn.region] // West - NE

( 1) - c.copper@1bn.region + c.copper@4.region = 0

------------------------------------------------------------------------------

Mean | Coefficient Std. err. t P>|t| [95% conf. interval]

-------------+----------------------------------------------------------------

(1) | 1.802041 2.085828 0.86 0.394 -2.452033 6.056115

------------------------------------------------------------------------------

lincom _b[c.copper@4.region] - _b[c.copper@2.region] // West - MW

( 1) - c.copper@2.region + c.copper@4.region = 0

------------------------------------------------------------------------------

Mean | Coefficient Std. err. t P>|t| [95% conf. interval]

-------------+----------------------------------------------------------------

(1) | .7140072 1.922821 0.37 0.713 -3.207612 4.635626

------------------------------------------------------------------------------

We can use the svy: total command to get totals. The values in the output can get to be very large, so we can use the estimates table command to see them. We can also use the matrix list command.

svy: total diabetes (running total on estimation sample) Survey: Total estimation Survey: Total estimation Number of strata = 31 Number of obs = 10,335 Number of PSUs = 62 Population size = 116,997,257 Design df = 31 -------------------------------------------------------------- | Linearized | Total std. err. [95% conf. interval] -------------+------------------------------------------------ diabetes | 4011281 233292.8 3535477 4487085 -------------------------------------------------------------- estimates table, b(%15.2f) se(%13.2f) -------------------------------- Variable | Active -------------+------------------ diabetes | 4011281.00 | 233292.85 -------------------------------- Legend: b/se svy: total diabetes (running total on estimation sample) Survey: Total estimation Number of strata = 31 Number of obs = 10,335 Number of PSUs = 62 Population size = 116,997,257 Design df = 31 -------------------------------------------------------------- | Linearized | Total std. err. [95% conf. interval] -------------+------------------------------------------------ diabetes | 4011281 233292.8 3535477 4487085 -------------------------------------------------------------- matlist e(b), format(%15.2f) | diabetes -------------+----------------- y1 | 4011281.00 svy, over(female): total diabetes (running total on estimation sample) Survey: Total estimation Number of strata = 31 Number of obs = 10,335 Number of PSUs = 62 Population size = 116,997,257 Design df = 31 ------------------------------------------------------------------- | Linearized | Total std. err. [95% conf. interval] ------------------+------------------------------------------------ c.diabetes@female | Male | 1636958 159586.3 1311480 1962436 Female | 2374323 167874.1 2031941 2716705 ------------------------------------------------------------------- estimates table, b(%15.2f) se(%13.2f) -------------------------------- Variable | Active -------------+------------------ c.diabetes@| female | Male | 1636958.00 | 159586.34 Female | 2374323.00 | 167874.15 -------------------------------- Legend: b/seGraphing with continuous variables

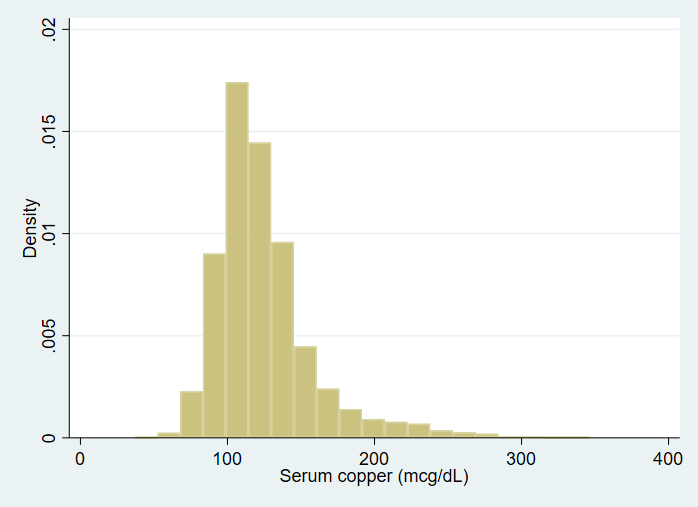

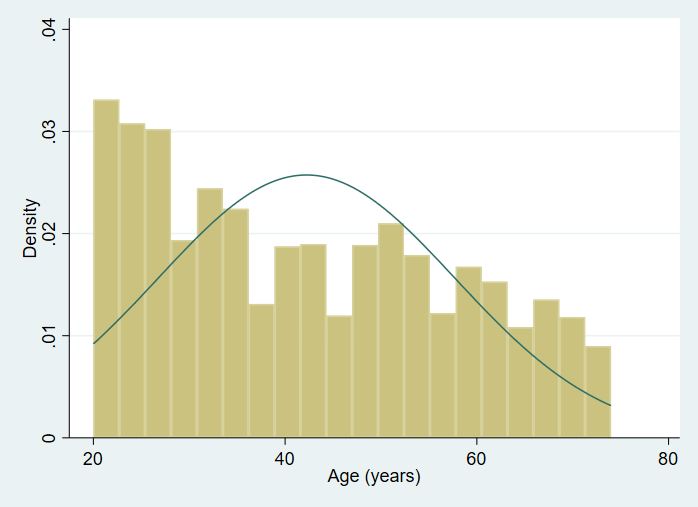

We can also get some descriptive graphs of our variables. For a continuous variable, you may want a histogram. However, the histogram command will only accept a frequency weight, which, by definition, can have only integer values. A suggestion by Heeringa, West and Berglund (2010, pages 121-122) is to simply use the integer part of the sampling weight. We can create a frequency weight from our sampling weight using the generate command with the int function.

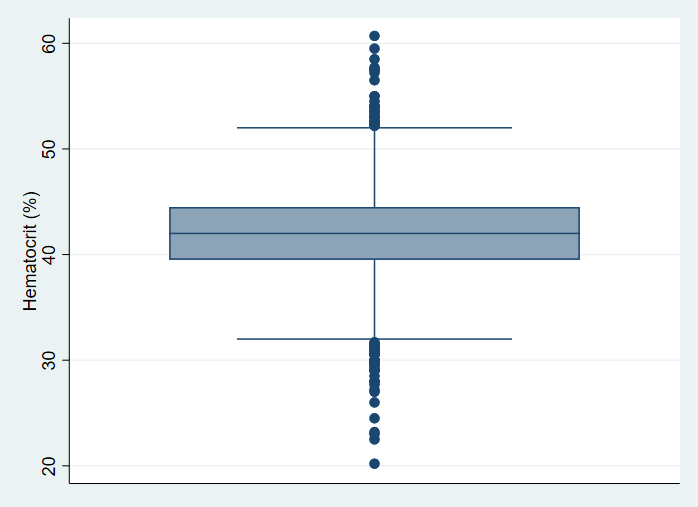

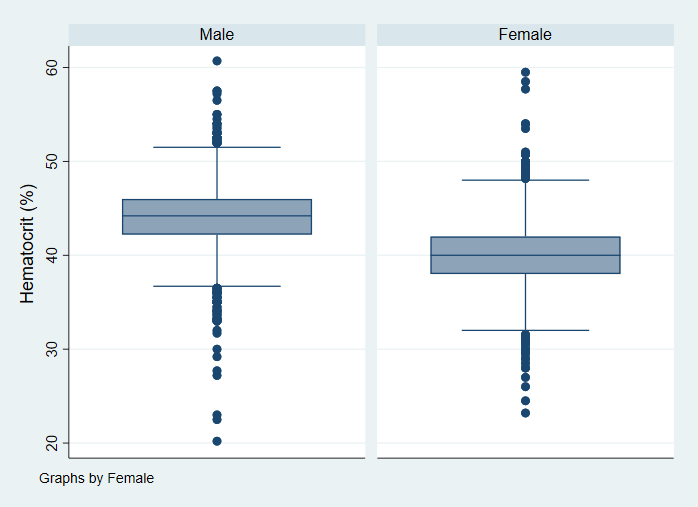

gen int_finalwgt = int(finalwgt) histogram copper [fw = int_finalwgt], bin(20)histogram age [fw = int_finalwgt], bin(20) normalWe can make box plots and use the sampling weight that is provided in the data set.

graph box hct [pw = finalwgt]graph box hct [pw = finalwgt], by(female)The line in the box plot represents the median, not the mean.

svy, over(female): mean hct (running mean on estimation sample) Survey: Mean estimation Number of strata = 31 Number of obs = 10,337 Number of PSUs = 62 Population size = 117,023,659 Design df = 31 -------------------------------------------------------------- | Linearized | Mean std. err. [95% conf. interval] -------------+------------------------------------------------ c.hct@female | Male | 44.21309 .0730382 44.06412 44.36205 Female | 39.92893 .0857013 39.75414 40.10372 -------------------------------------------------------------- estat sd ------------------------------------- Over | Mean Std. dev. -------------+----------------------- c.hct@female | Male | 44.21309 2.891824 Female | 39.92893 3.02211 -------------------------------------There is no survey command in Stata that will give us the median, but we can use a “hack” and specify the sampling weight as an analytic weight. (Please see https://www.stata.com/support/faqs/statistics/percentiles-for-survey-data/ for more information.)

bysort female: summarize hct [aw = finalwt], detail ---------------------------------------------------------------------------------------------------------------------------- -> female = Male Hematocrit (%) ------------------------------------------------------------- Percentiles Smallest 1% 37 20.2 5% 39.5 22.5 10% 40.7 23 Obs 4,909 25% 42.2 27.2 Sum of wgt. 56122035 50% 44.2 Mean 44.21309 Largest Std. dev. 2.906201 75% 46 57.2 90% 47.7 57.5 Variance 8.446005 95% 48.7 57.5 Skewness -.2544314 99% 51 60.7 Kurtosis 5.152102 ---------------------------------------------------------------------------------------------------------------------------- -> female = Female Hematocrit (%) ------------------------------------------------------------- Percentiles Smallest 1% 33 23.2 5% 35 24.5 10% 36.2 26 Obs 5,428 25% 38 27 Sum of wgt. 60901624 50% 40 Mean 39.92893 Largest Std. dev. 3.008737 75% 42 54 90% 43.7 57.7 Variance 9.052499 95% 45 58.5 Skewness .0781784 99% 47 59.5 Kurtosis 4.524747Relationships between two continuous variables

Let’s look at some bivariate relationships. As of Stata 17, there is not a convenience command to get correlations (but there is in SUDAAN 11).

To get a weighed correlation in Stata, we can use the svy: sem command with the standardize option. Clearly, this is not an intuitive way to get a correlation coefficient! The idea here is that a correlation is a standardized covariance estimate. One advantage to calculating the correlation coefficient this way is that the output contains not only the correlation coefficient and the associated p-value, but also the standard error. In the output shown below, we are interested only in the last line. The correlation coefficient is 0.0281931, and its standard error is 0.009628.

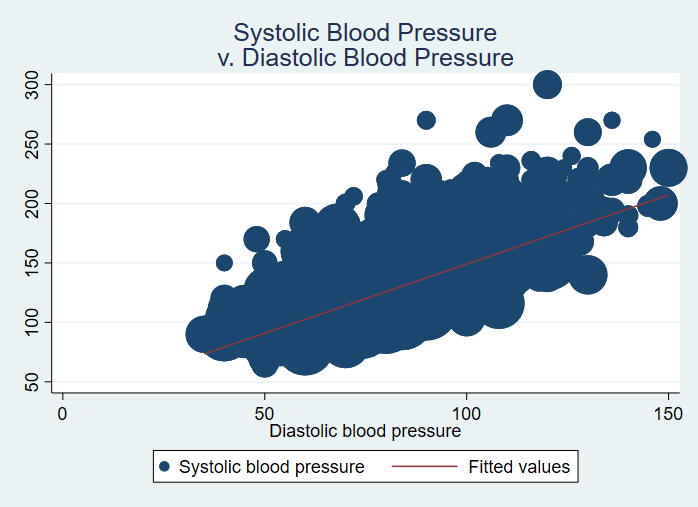

svy: sem (<- diabetes copper), standardize (running sem on estimation sample) Survey: Structural equation model Number of obs = 9,116 Number of strata = 31 Population size = 103,479,298 Number of PSUs = 62 Design df = 31 ------------------------------------------------------------------------------------- | Linearized Standardized | Coefficient std. err. t P>|t| [95% conf. interval] --------------------+---------------------------------------------------------------- mean(diabetes)| .1887943 .0059407 31.78 0.000 .1766782 .2009105 mean(copper)| 3.667598 .0656603 55.86 0.000 3.533683 3.801513 --------------------+---------------------------------------------------------------- var(diabetes)| 1 . . . var(copper)| 1 . . . --------------------+---------------------------------------------------------------- cov(diabetes,copper)| .0281931 .009628 2.93 0.006 .0085565 .0478296 ------------------------------------------------------------------------------------- svy: sem (<- bpsystol bpdiast), standardize (running sem on estimation sample) Survey: Structural equation model Number of obs = 10,337 Number of strata = 31 Population size = 117,023,659 Number of PSUs = 62 Design df = 31 -------------------------------------------------------------------------------------- | Linearized Standardized | Coefficient std. err. t P>|t| [95% conf. interval] ---------------------+---------------------------------------------------------------- mean(bpsystol)| 5.930388 .0823673 72.00 0.000 5.762398 6.098377 mean(bpdiast)| 6.331351 .1097803 57.67 0.000 6.107453 6.555249 ---------------------+---------------------------------------------------------------- var(bpsystol)| 1 . . . var(bpdiast)| 1 . . . ---------------------+---------------------------------------------------------------- cov(bpsystol,bpdiast)| .695335 .0073571 94.51 0.000 .6803301 .7103398 --------------------------------------------------------------------------------------The graphical version of a correlation is a scatterplot, which we show below. We will include the fit line.

svy: mean bpsystol bpdiast (running mean on estimation sample) Survey: Mean estimation Number of strata = 31 Number of obs = 10,337 Number of PSUs = 62 Population size = 117,023,659 Design df = 31 -------------------------------------------------------------- | Linearized | Mean std. err. [95% conf. interval] -------------+------------------------------------------------ bpsystol | 126.9476 .6040197 125.7157 128.1795 bpdiast | 81.02173 .5086788 79.98427 82.05918 -------------------------------------------------------------- twoway (scatter bpsystol bpdiast [pw = finalwgt]) (lfit bpsystol bpdiast [pw = finalwgt]), /// title("Systolic Blood Pressure" "v. Diastolic Blood Pressure")Descriptive statistics with categorical variables

Let’s get some descriptive statistics with categorical variables. We can use the svy: tabulate and svy: proportion commands. We will use the region variable, region, which has four levels. When used with no options, the output from the svy: tab command will give us the same information as the svy: proportion command.

svy: tab region (running tabulate on estimation sample) Number of strata = 31 Number of obs = 10,337 Number of PSUs = 62 Population size = 117,023,659 Design df = 31 ---------------------- Region | proportion ----------+----------- NE | .2063 MW | .2492 S | .2656 W | .2789 | Total | 1 ---------------------- Key: proportion = Cell proportion svy: proportion region (running proportion on estimation sample) Survey: Proportion estimation Number of strata = 31 Number of obs = 10,337 Number of PSUs = 62 Population size = 117,023,659 Design df = 31 -------------------------------------------------------------- | Linearized Logit | Proportion std. err. [95% conf. interval] -------------+------------------------------------------------ region | NE | .2063353 .0056671 .1950164 .2181331 MW | .249163 .0061898 .2367536 .2619997 S | .2655949 .0103955 .2449398 .2873289 W | .2789068 .0113228 .2564096 .3025747 --------------------------------------------------------------Let’s use some options with the svy: tab command so that we can see the estimated number of people in each category.

svy: tab region, count cellwidth(12) format(%12.2g) (running tabulate on estimation sample) (running tabulate on estimation sample) Number of strata = 31 Number of obs = 10,337 Number of PSUs = 62 Population size = 117,023,659 Design df = 31 ------------------------ Region | count ----------+------------- NE | 24146107 MW | 29157970 S | 31080884 W | 32638698 | Total | 117023659 ------------------------ Key: count = Weighted countThere are many options that can by used with svy: tab. Please see the Stata help file for the svy: tabulate command for a complete listing and description of each option. Only five items can be displayed at once, and the ci option counts as two items.

svy: tab region, cell count obs cellwidth(12) format(%12.2g) (running tabulate on estimation sample) Number of strata = 31 Number of obs = 10,337 Number of PSUs = 62 Population size = 117,023,659 Design df = 31 ---------------------------------------------------- Region | count proportion obs ----------+----------------------------------------- NE | 24146107 .21 2086 MW | 29157970 .25 2773 S | 31080884 .27 2853 W | 32638698 .28 2625 | Total | 117023659 1 10337 ---------------------------------------------------- Key: count = Weighted count proportion = Cell proportion obs = Number of observations svy: tab region, count se cellwidth(15) format(%15.2g) (running tabulate on estimation sample) Number of strata = 31 Number of obs = 10,337 Number of PSUs = 62 Population size = 117,023,659 Design df = 31 -------------------------------------------- Region | count se ----------+--------------------------------- NE | 24146107 569293 MW | 29157970 566351 S | 31080884 1508325 W | 32638698 1714567 | Total | 117023659 -------------------------------------------- Key: count = Weighted count se = Linearized standard error of weighted count svy: tab region, count deff deft cv cellwidth(12) format(%12.2g) (running tabulate on estimation sample) Number of strata = 31 Number of obs = 10,337 Number of PSUs = 62 Population size = 117,023,659 Design df = 31 -------------------------------------------- Region | count se ----------+--------------------------------- NE | 24146107 569293 MW | 29157970 566351 S | 31080884 1508325 W | 32638698 1714567 | Total | 117023659 -------------------------------------------- Key: count = Weighted count se = Linearized standard error of weighted countChi-square tests are provided by default when svy: tab is issued with two variables. You will usually want to use the design-based test.

The proportion of observations in each cell can be obtained using either the svy: tab or the svy: proportion command. If you want to compare the proportions within two cells, you can use the lincom command.

svy: tab agegrp female, cell obs count cellwidth(12) format(%12.2g) (running tabulate on estimation sample) Number of strata = 31 Number of obs = 10,337 Number of PSUs = 62 Population size = 117,023,659 Design df = 31 ---------------------------------------------------- | Female Age group | Male Female Total ----------+----------------------------------------- 20–29 | 15918998 16938699 32857697 | .14 .14 .28 | 1116 1204 2320 | 30–39 | 11777252 12150570 23927822 | .1 .1 .2 | 770 851 1621 | 40–49 | 9538701 10152242 19690943 | .082 .087 .17 | 610 660 1270 | 50–59 | 9210429 10337703 19548132 | .079 .088 .17 | 601 688 1289 | 60–69 | 7326861 8261777 15588638 | .063 .071 .13 | 1365 1487 2852 | 70+ | 2349794 3060633 5410427 | .02 .026 .046 | 447 538 985 | Total | 56122035 60901624 117023659 | .48 .52 1 | 4909 5428 10337 ---------------------------------------------------- Key: Weighted count Cell proportion Number of observations Pearson: Uncorrected chi2(5) = 6.7106 Design-based F(3.91, 121.15) = 1.2445 P = 0.2960 svy: proportion agegrp, over(female) (running proportion on estimation sample) Survey: Proportion estimation Number of strata = 31 Number of obs = 10,337 Number of PSUs = 62 Population size = 117,023,659 Design df = 31 --------------------------------------------------------------- | Linearized Logit | Proportion std. err. [95% conf. interval] --------------+------------------------------------------------ agegrp@female | 20–29 Male | .2836497 .0095365 .2646088 .3034953 20–29 Female | .2781321 .0085494 .2610357 .2958999 30–39 Male | .2098508 .0086259 .1927991 .2279846 30–39 Female | .1995114 .0059814 .1875917 .2119909 40–49 Male | .1699636 .0059311 .1582069 .1824046 40–49 Female | .166699 .0051213 .1565142 .1774073 50–59 Male | .1641143 .0070436 .1502492 .1789894 50–59 Female | .1697443 .0068912 .1561486 .1842653 60–69 Male | .1305523 .0051017 .120495 .1413141 60–69 Female | .1356577 .0040929 .1275246 .1442239 70+ Male | .0418694 .0029631 .0362262 .0483475 70+ Female | .0502554 .0038064 .0430362 .0586114 --------------------------------------------------------------- svy: proportion agegrp, over(female) coeflegend (running proportion on estimation sample) Survey: Proportion estimation Number of strata = 31 Number of obs = 10,337 Number of PSUs = 62 Population size = 117,023,659 Design df = 31 ------------------------------------------------------------------------------- | Proportion Legend --------------+---------------------------------------------------------------- agegrp@female | 20–29 Male | .2836497 _b[1bn.agegrp@0bn.female] 20–29 Female | .2781321 _b[1bn.agegrp@1.female] 30–39 Male | .2098508 _b[2.agegrp@0bn.female] 30–39 Female | .1995114 _b[2.agegrp@1.female] 40–49 Male | .1699636 _b[3.agegrp@0bn.female] 40–49 Female | .166699 _b[3.agegrp@1.female] 50–59 Male | .1641143 _b[4.agegrp@0bn.female] 50–59 Female | .1697443 _b[4.agegrp@1.female] 60–69 Male | .1305523 _b[5.agegrp@0bn.female] 60–69 Female | .1356577 _b[5.agegrp@1.female] 70+ Male | .0418694 _b[6.agegrp@0bn.female] 70+ Female | .0502554 _b[6.agegrp@1.female] ------------------------------------------------------------------------------- lincom _b[1bn.agegrp@0bn.female] - _b[1bn.agegrp@1.female] // (20-29 male) - (20-29 female) ( 1) 1bn.agegrp@0bn.female - 1bn.agegrp@1.female = 0 ------------------------------------------------------------------------------ Proportion | Coefficient Std. err. t P>|t| [95% conf. interval] -------------+---------------------------------------------------------------- (1) | .0055176 .0108797 0.51 0.616 -.0166718 .0277069 ------------------------------------------------------------------------------ display .2836497 - .2781321 .0055176Graphs with categorical variables

Let’s create a bar graph of the variable female. This graph will show the percent of observations that are female and male. To do this, we will need to create a new variable, which we will call male; it will be the opposite of female.

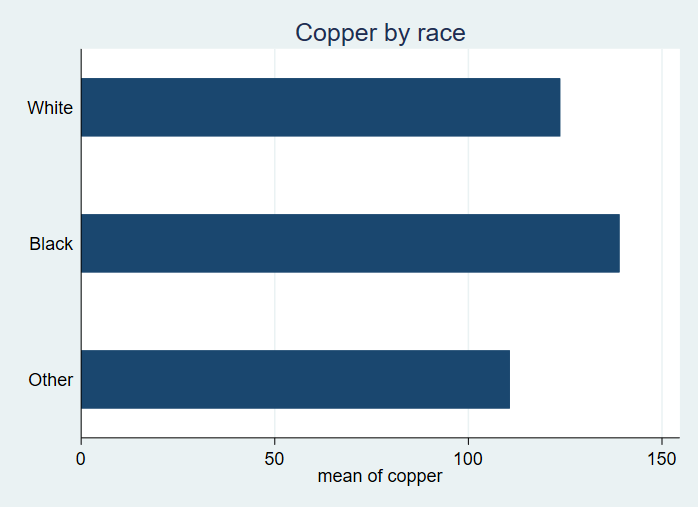

gen male = !female graph bar (mean) female male [pw = finalwgt], percentages bargap(7)We can also graph the mean of the variable copper by each level of race.

svy: mean copper, over(race) (running mean on estimation sample) Survey: Mean estimation Number of strata = 31 Number of obs = 9,118 Number of PSUs = 62 Population size = 103,505,700 Design df = 31 --------------------------------------------------------------- | Linearized | Mean std. err. [95% conf. interval] --------------+------------------------------------------------ c.copper@race | White | 123.7236 .6372616 122.4239 125.0233 Black | 139.0427 2.222075 134.5107 143.5746 Other | 110.714 1.075104 108.5213 112.9067 --------------------------------------------------------------- graph hbar copper [pw = finalwgt], over(race, gap(*2)) /// title("Copper by race")Finally, we will graph the mean of hct for each level of region.

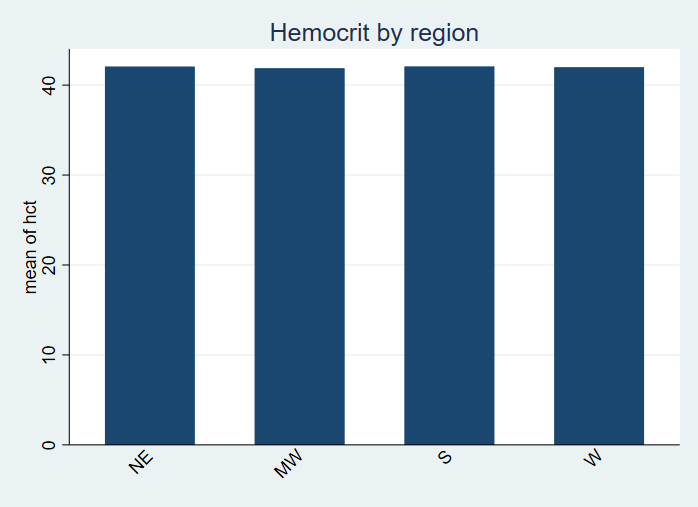

svy: mean hct, over(region) (running mean on estimation sample) Survey: Mean estimation Number of strata = 31 Number of obs = 10,337 Number of PSUs = 62 Population size = 117,023,659 Design df = 31 -------------------------------------------------------------- | Linearized | Mean std. err. [95% conf. interval] -------------+------------------------------------------------ c.hct@region | NE | 42.0485 .1610878 41.71996 42.37704 MW | 41.85997 .1606116 41.5324 42.18754 S | 42.05992 .1506741 41.75262 42.36722 W | 41.97306 .1361827 41.69532 42.25081 --------------------------------------------------------------graph bar hct [pw = finalwgt], over(region, label(angle(45))) /// title("Hemocrit by region")OLS regression

Now that we have descriptive statistics on our variables, we may want to run some inferential statistics, such as OLS regression or logistic regression. As before, we simply need to use the -svy- prefix before our regression commands.

Please note that the following analyses are shown only as examples of how to do these analyses in Stata. There was no attempt to create substantively meaningful models. Rather, the variables were chosen for illustrative purposes only. We do not recommend that researchers create their models this way.

We will start with an OLS regression with one categorical predictor (female). This is equivalent to a t-test. We will use the margins command get the means for each group. The vce(unconditional) option is used on all of the margins commands. This is the entry for this option from the Stata documentation:

vce(unconditional) specifies that the covariates that are not fixed be treated in a way that accounts for their having been sampled. The VCE is estimated using the linearization method. This method allows for heteroskedasticity or other violations of distributional assumptions and allows for correlation among the observations in the same manner as vce(robust) and vce(cluster…), which may have been specified with the estimation command. This method also accounts for complex survey designs if the data are svyset. See Obtaining margins with survey data and representative samples. When you use complex survey data, this method requires that the linearized variance estimation method be used for the model. See [SVY]svypostestimation for an example of margins with replication-based methods.

The coeflegend option is also used; this is a handy option that can be used with many Stata estimation commands and gives the labels that Stata assigns to these values. You use these labels to refer to the values when using commands such as lincom.

NOTE: If a regression is run on a subpopulation, the subpopulation must be specified as an option on the margins command if the desired output from margins is to the subpopulation.

svy: regress copper i.female (running regress on estimation sample) Survey: Linear regression Number of strata = 31 Number of obs = 9,118 Number of PSUs = 62 Population size = 103,505,700 Design df = 31 F(1, 31) = 613.75 Prob > F = 0.0000 R-squared = 0.1489 ------------------------------------------------------------------------------ | Linearized copper | Coefficient std. err. t P>|t| [95% conf. interval] -------------+---------------------------------------------------------------- female | Female | 26.26294 1.060099 24.77 0.000 24.10085 28.42502 _cons | 111.1328 .5685644 195.46 0.000 109.9732 112.2924 ------------------------------------------------------------------------------ margins female, vce(unconditional) Adjusted predictions Number of strata = 31 Number of obs = 9,118 Number of PSUs = 62 Population size = 103,505,700 Design df = 31 Expression: Linear prediction, predict() ------------------------------------------------------------------------------ | Linearized | Margin std. err. t P>|t| [95% conf. interval] -------------+---------------------------------------------------------------- female | Male | 111.1328 .5685644 195.46 0.000 109.9732 112.2924 Female | 137.3958 1.051281 130.69 0.000 135.2517 139.5399 ------------------------------------------------------------------------------ display 137.3958 - 111.1328 26.263 margins female, vce(unconditional) coeflegend post // the post option is necessary when using the coeflegend option Adjusted predictions Number of strata = 31 Number of obs = 9,118 Number of PSUs = 62 Population size = 103,505,700 Design df = 31 Expression: Linear prediction, predict() ------------------------------------------------------------------------------ | Margin Legend -------------+---------------------------------------------------------------- female | Male | 111.1328 _b[0bn.female] Female | 137.3958 _b[1.female] ------------------------------------------------------------------------------ lincom _b[1.female] - _b[0bn.female] ( 1) - 0bn.female + 1.female = 0 ------------------------------------------------------------------------------ | Coefficient Std. err. t P>|t| [95% conf. interval] -------------+---------------------------------------------------------------- (1) | 26.26294 1.060099 24.77 0.000 24.10085 28.42502 ------------------------------------------------------------------------------We can run the same anlaysis using the svy: mean and lincom commands.

svy, over(female): mean copper (running mean on estimation sample) Survey: Mean estimation Number of strata = 31 Number of obs = 9,118 Number of PSUs = 62 Population size = 103,505,700 Design df = 31 ----------------------------------------------------------------- | Linearized | Mean std. err. [95% conf. interval] ----------------+------------------------------------------------ c.copper@female | Male | 111.1328 .5685644 109.9732 112.2924 Female | 137.3958 1.051281 135.2517 139.5399 ----------------------------------------------------------------- svy, over(female): mean copper, coeflegend (running mean on estimation sample) Survey: Mean estimation Number of strata = 31 Number of obs = 9,118 Number of PSUs = 62 Population size = 103,505,700 Design df = 31 --------------------------------------------------------------------------------- | Mean Legend ----------------+---------------------------------------------------------------- c.copper@female | Male | 111.1328 _b[c.copper@0bn.female] Female | 137.3958 _b[c.copper@1.female] --------------------------------------------------------------------------------- lincom _b[c.copper@1.female] - _b[c.copper@0bn.female] ( 1) - c.copper@0bn.female + c.copper@1.female = 0 ------------------------------------------------------------------------------ Mean | Coefficient Std. err. t P>|t| [95% conf. interval] -------------+---------------------------------------------------------------- (1) | 26.26294 1.060099 24.77 0.000 24.10085 28.42502 ------------------------------------------------------------------------------Let’s return to running linear regression models. We will add a continuous predictor to our OLS regression, so now the model has one categorical predictor (female) and one continuous predictor (<bage). In the output we see both the number of observations used in and the estimate of the size of the population. We see the overall test of the model and the R-squared for the model. The R-squared is the population R-squared and can be thought of as the adjusted R-squared. We will follow the svy: regress command with the margins command, which gives us the predicted values for each level of female.

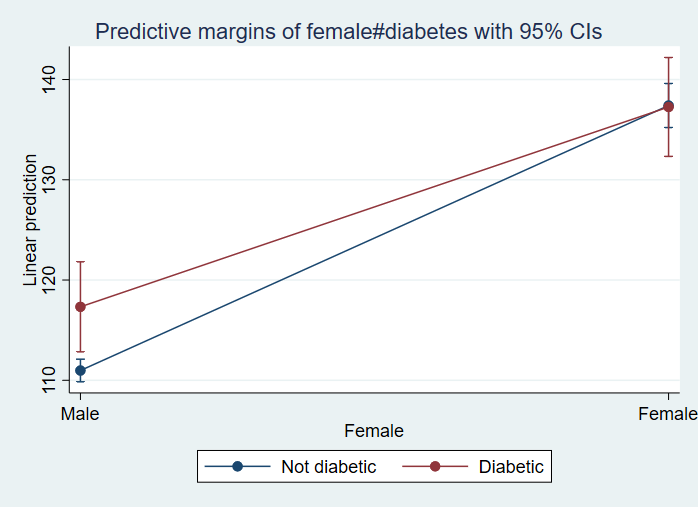

svy: regress copper i.female age (running regress on estimation sample) Survey: Linear regression Number of strata = 31 Number of obs = 9,118 Number of PSUs = 62 Population size = 103,505,700 Design df = 31 F(2, 30) = 448.23 Prob > F = 0.0000 R-squared = 0.1497 ------------------------------------------------------------------------------ | Linearized copper | Coefficient std. err. t P>|t| [95% conf. interval] -------------+---------------------------------------------------------------- female | Female | 26.20177 1.087402 24.10 0.000 23.984 28.41954 age | .0635486 .0330805 1.92 0.064 -.0039196 .1310168 _cons | 108.4748 1.540579 70.41 0.000 105.3327 111.6168 ------------------------------------------------------------------------------ margins female, vce(unconditional) Predictive margins Number of strata = 31 Number of obs = 9,118 Number of PSUs = 62 Population size = 103,505,700 Design df = 31 Expression: Linear prediction, predict() ------------------------------------------------------------------------------ | Linearized | Margin std. err. t P>|t| [95% conf. interval] -------------+---------------------------------------------------------------- female | Male | 111.1645 .5718949 194.38 0.000 109.9981 112.3309 Female | 137.3663 1.062916 129.24 0.000 135.1984 139.5341 ------------------------------------------------------------------------------Now let’s run a model with a categorical by categorical (specifically a binary by binary) interaction term. We can use the contrast command to get the test of the interaction displayed as an F-test rather than a t-statistic. Of course, the p-value is exactly the same as the one shown in the table of coefficients because the test of a binary by binary interaction is a one degree-of-freedom test. We can use the margins command to get the predicted values of copper for each combination of female and diabetes. The marginsplot command is used to obtain a graph of the interaction (marginsplot graphs the values shown in the output from the margins command).

svy: regress copper i.female##i.diabetes age (running regress on estimation sample) Survey: Linear regression Number of strata = 31 Number of obs = 9,116 Number of PSUs = 62 Population size = 103,479,298 Design df = 31 F(4, 28) = 315.94 Prob > F = 0.0000 R-squared = 0.1505 ---------------------------------------------------------------------------------- | Linearized copper | Coefficient std. err. t P>|t| [95% conf. interval] -----------------+---------------------------------------------------------------- female | Female | 26.42748 1.091601 24.21 0.000 24.20114 28.65381 | diabetes | Diabetic | 6.350642 2.034884 3.12 0.004 2.200469 10.50082 | female#diabetes | Female#Diabetic | -6.493926 2.706082 -2.40 0.023 -12.01302 -.9748357 | age | .0573067 .0332424 1.72 0.095 -.0104917 .1251051 _cons | 108.5489 1.542311 70.38 0.000 105.4034 111.6945 ---------------------------------------------------------------------------------- contrast female#diabetes, effects Contrasts of marginal linear predictions Design df = 31 Margins: asbalanced --------------------------------------------------- | df F P>F ----------------+---------------------------------- female#diabetes | 1 5.76 0.0226 Design | 31 --------------------------------------------------- Note: F statistics are adjusted for the survey design. ------------------------------------------------------------------------------------------------------ | Contrast Std. err. t P>|t| [95% conf. interval] -------------------------------------+---------------------------------------------------------------- female#diabetes | (Female vs base) (Diabetic vs base) | -6.493926 2.706082 -2.40 0.023 -12.01302 -.9748357 ------------------------------------------------------------------------------------------------------ margins female#diabetes, vce(unconditional) Predictive margins Number of strata = 31 Number of obs = 9,116 Number of PSUs = 62 Population size = 103,479,298 Design df = 31 Expression: Linear prediction, predict() -------------------------------------------------------------------------------------- | Linearized | Margin std. err. t P>|t| [95% conf. interval] ---------------------+---------------------------------------------------------------- female#diabetes | Male#Not diabetic | 110.9745 .5531673 200.62 0.000 109.8463 112.1027 Male#Diabetic | 117.3252 2.201312 53.30 0.000 112.8356 121.8148 Female#Not diabetic | 137.402 1.077068 127.57 0.000 135.2053 139.5987 Female#Diabetic | 137.2587 2.417731 56.77 0.000 132.3277 142.1897 -------------------------------------------------------------------------------------marginsplot Variables that uniquely identify margins: female diabetesSome of you may recognize this analysis as a “difference-in-differences” analysis. In other words, the term of interest is the binary-by-binary interaction term. Let’s reproduce the coefficient of this interaction term, -6.493926, by taking the difference of the differences ((male_no – male_yes) -(female_no – female_yes)). First, we need to need to rerun the margins command with the coeflegend option and the post option; the post option is needed so that we can access the values remembered by margins with another command. The output will show us the names of the values. We will use these names rather than the actual values themselves, so that if the data or model were changed, the code would not need to be modified. We will use the lincom command to do the math for us. The advantage of using the lincom command over the display command is that lincom will also produce the standard error.

margins female#diabetes, vce(unconditional) Predictive margins Number of strata = 31 Number of obs = 9,116 Number of PSUs = 62 Population size = 103,479,298 Design df = 31 Expression: Linear prediction, predict() -------------------------------------------------------------------------------------- | Linearized | Margin std. err. t P>|t| [95% conf. interval] ---------------------+---------------------------------------------------------------- female#diabetes | Male#Not diabetic | 110.9745 .5531673 200.62 0.000 109.8463 112.1027 Male#Diabetic | 117.3252 2.201312 53.30 0.000 112.8356 121.8148 Female#Not diabetic | 137.402 1.077068 127.57 0.000 135.2053 139.5987 Female#Diabetic | 137.2587 2.417731 56.77 0.000 132.3277 142.1897 --------------------------------------------------------------------------------------margins, vce(unconditional) coeflegend post Predictive margins Number of strata = 31 Number of obs = 9,116 Number of PSUs = 62 Population size = 103,479,298 Design df = 31 Expression: Linear prediction, predict() -------------------------------------------------------------------------------------- | Linearized | Margin std. err. t P>|t| [95% conf. interval] ---------------------+---------------------------------------------------------------- female#diabetes | Male#Not diabetic | 110.9745 .5531673 200.62 0.000 109.8463 112.1027 Male#Diabetic | 117.3252 2.201312 53.30 0.000 112.8356 121.8148 Female#Not diabetic | 137.402 1.077068 127.57 0.000 135.2053 139.5987 Female#Diabetic | 137.2587 2.417731 56.77 0.000 132.3277 142.1897 --------------------------------------------------------------------------------------lincom (_b[0bn.female#0bn.diabetes]-_b[0bn.female#1.diabetes]) - (_b[1.female#0bn.diabetes]-_b[1.female#1.diabetes]) ( 1) 0bn.female#0bn.diabetes - 0bn.female#1.diabetes - 1.female#0bn.diabetes + 1.female#1.diabetes = 0 ------------------------------------------------------------------------------ | Coefficient Std. err. t P>|t| [95% conf. interval] -------------+---------------------------------------------------------------- (1) | -6.493926 2.706082 -2.40 0.023 -12.01302 -.9748357 ------------------------------------------------------------------------------We can see that the output from the lincom command is the same as the output for the interaction term in the original regression command.

Looking back at the graph of the interaction, you may wonder if the two points for male are statistically significantly different from one another, and the same for the two points for females. The lincom command can be used for these comparisons.

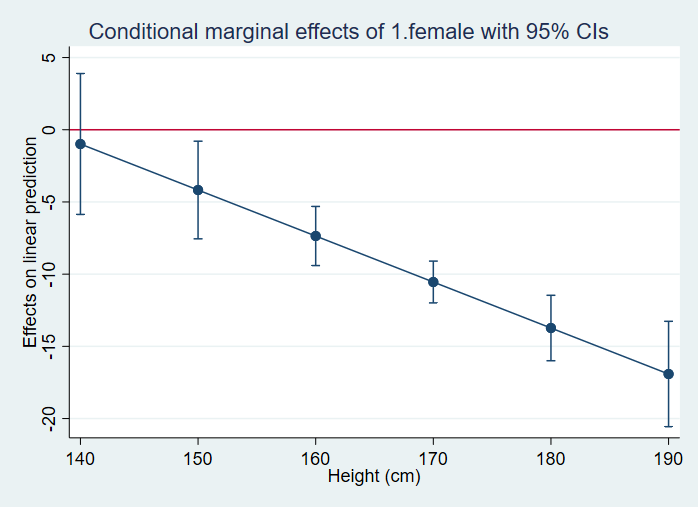

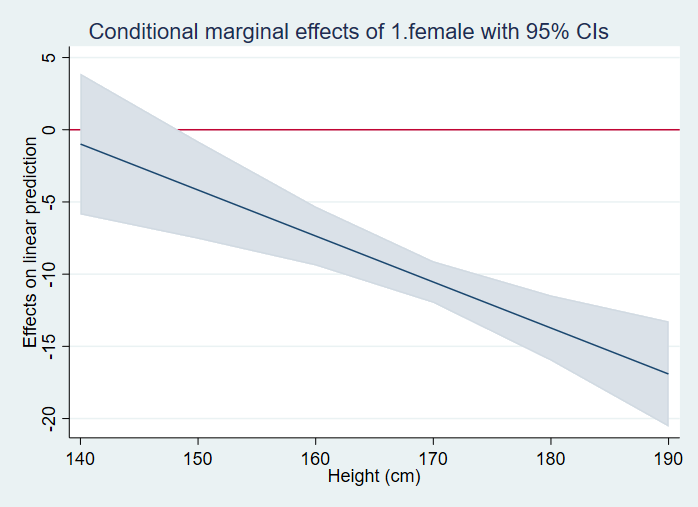

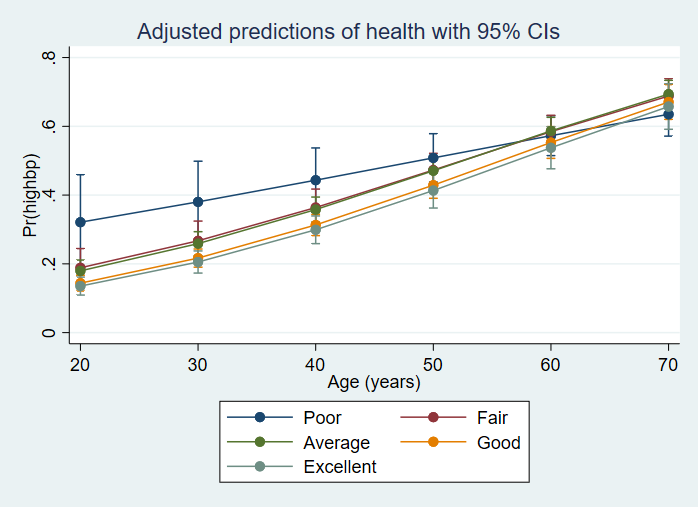

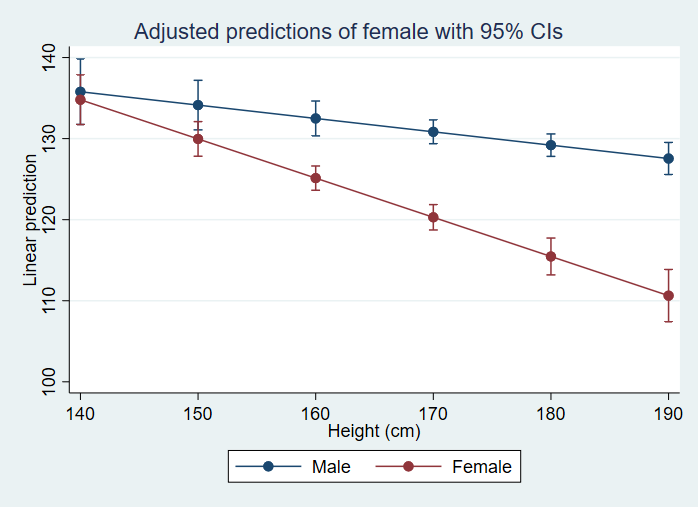

* males lincom (_b[0bn.female#0bn.diabetes]-_b[0bn.female#1.diabetes]) ( 1) 0bn.female#0bn.diabetes - 0bn.female#1.diabetes = 0 ------------------------------------------------------------------------------ | Coefficient Std. err. t P>|t| [95% conf. interval] -------------+---------------------------------------------------------------- (1) | -6.350642 2.034884 -3.12 0.004 -10.50082 -2.200469 ------------------------------------------------------------------------------ * females lincom (_b[1.female#0bn.diabetes]-_b[1.female#1.diabetes]) ( 1) 1.female#0bn.diabetes - 1.female#1.diabetes = 0 ------------------------------------------------------------------------------ | Coefficient Std. err. t P>|t| [95% conf. interval] -------------+---------------------------------------------------------------- (1) | .1432847 2.42126 0.06 0.953 -4.794907 5.081476 ------------------------------------------------------------------------------In the next example, we will use a categorical by continuous interaction. In the first call to the margins command, we get the simple slope coefficients for males and females. The p-values in this table tell us that both slopes are significantly different from 0. In the second call to the margins command, we get the predicted values for each level of female at height = 140, height = 150, height = 160, up to height = 190. The t-statistics and corresponding p-values tell us if the predicted value is different from 0.